The Changing Landscape of educA.tI.on

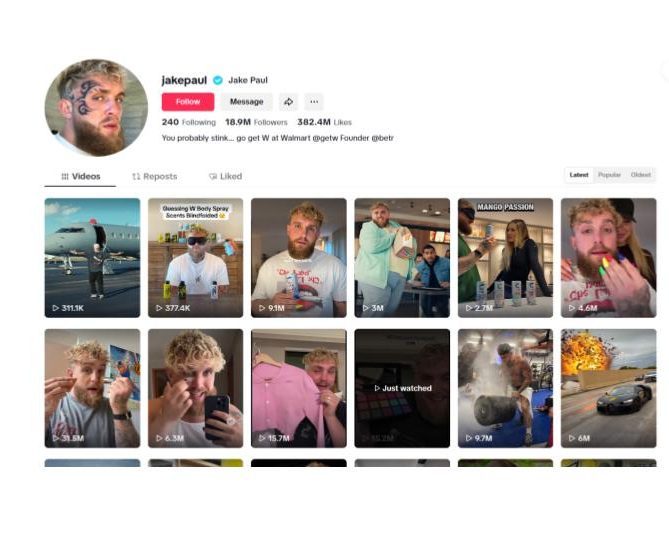

Last week, a TikTok of famous influencer and professional boxer Jake Paul doing a “get ready with me” went viral. The TikTok featured Jake Paul applying makeup and finally “coming out” and revealing his “true” identity to the world, shocking millions of viewers.

As a result, social media buzzed with thousands of reactions and debates about the video until its creator revealed that it was AI-generated, a product of the newest AI software, Sora 2.

This isn’t the only time AI has fooled us. About two months ago, another AI video on TikTok, this time of bunnies jumping on a trampoline, also went viral, convincing millions of people that it was real.

While this clearly foreshadows a highly technologically advanced future with hundreds of opportunities, does this also serve as a warning sign? Is AI reducing our ability to distinguish the truth?

With each new release, AI software grows more realistic, and it is becoming dangerously difficult to differentiate reality from AI. Some AI software, such as Sora 2, can now add sound to videos and impersonate voices.

Although this new tool can serve many useful purposes and even provide light-hearted entertainment, it can also be badly abused.

Scammers can use this tool to impersonate people’s voices and scam others much more easily. Kidnappers can easily generate AI videos of kids and use them to manipulate parents. Anyone can generate and post almost anything with AI.

Without proper restrictions and regulation, deception will flood our lives, and the truth will become a relic.

AI is already used for much more than generating cute animal images and relaxing ASMR videos of cutting open planets.

According to Virginia Tech, recommendation algorithms, companies seeking new ways to maximize profit and engagement, and even basic human decision-making all rely heavily on AI. That is correct: AI is being used to decide what people should eat for dinner or which Labubu to buy next.

It doesn’t stop there. According to Loma Linda University Health, 28% of people, across all ages, rely on AI for quick support or therapeutic matters. This is extremely dangerous because most AI chatbots are not designed for this purpose.

An experiment conducted by Stanford University tested five AI chatbots on their ability to provide effective and unbiased feedback on a range of mental health issues. Across all of the chatbots, the feedback showed stigmatization, an inability to detect suicidal behavior, and significant bias related to gender and race.

Similar studies show an increasing number of people developing emotional dependency on AI. Yes, many chatbots are designed to imitate human behavior, such as providing comfort and reassurance. Unlike with humans, however, that behavior is not authentic.

It is vital to remember the difference between actual human interactions and interactions with software programmed to imitate human behavior.

Not only should we be mindful of our AI usage, but we should also consider the detrimental effects of AI on our permanent home, Earth.

According to the UN Environment Programme, “Most large-scale AI deployments are housed in data centres, including those operated by cloud service providers.” These data centers not only consume vast amounts of electricity and water, which accelerates the emission of greenhouse gases, but also rely heavily on rare earth minerals, which are mined unsustainably.

These data centers also produce waste products like lead and mercury, which further harm our environment and a planet that is already suffering from climate change.

Failing to use AI responsibly will continue to harm our environment. Ignoring the environmental effects of AI won’t lead to progress, only to pollution disguised as innovation.

Although AI can certainly be used in many great ways, such as tutoring help for students (with fact-checking), inspiration and ideas, creating schedules or lesson plans, and even ethical entertainment, it is imperative that we are mindful of our AI usage.

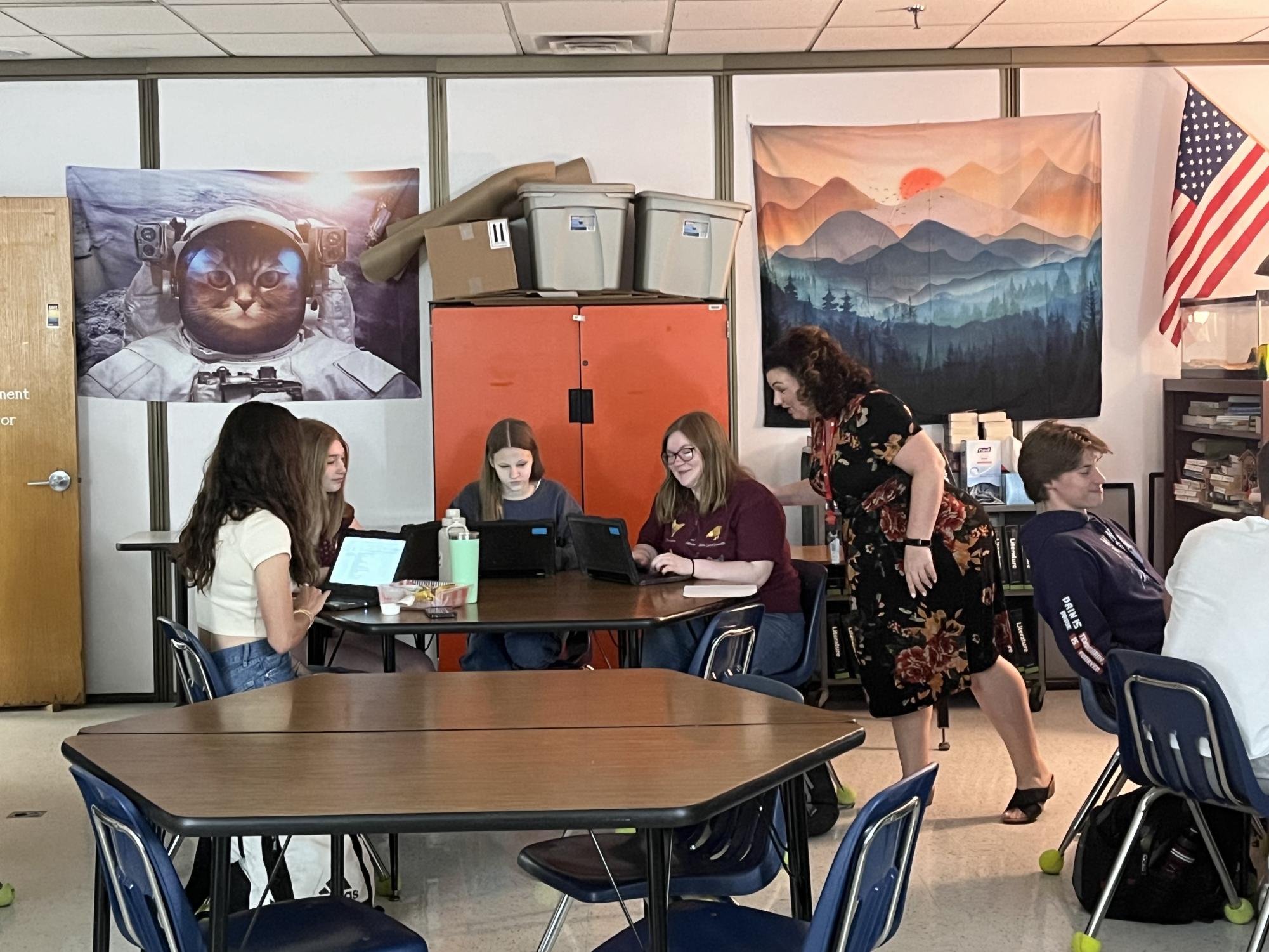

Like many other students across the country, students at Wheeling Park High School are forbidden from using AI for any assignments or projects.

“The biggest difference that AI has made in schools is that students are no longer comfortable engaging in productive struggle,” said Mrs. Johnson, an English teacher at Wheeling Park. Teachers have set clear boundaries for AI, with violations resulting in serious consequences, including completely failing the assignment.

“Skipping the important step of productively struggling and then applying their own critical thinking skills to solve those struggles robs students of needed growth and self-confidence,” said Johnson.

While students are restricted from using AI, the same technology is being used to create assignments and assessments, reflecting the growing role of AI in education. This disparity raises concerns about fairness and equality.

Students and teachers at Wheeling Park share their opinions on the growing use of AI in education.

“I definitely think that AI can be used in a good way in schools. It does help students with looking at individual steps of a problem, specifically in Chemistry,” said Mrs. Redilla, a teacher and the science department chair at Park.

“I think it is a very helpful tool to use on assignments, but AI-generated tests are really bad, because the teachers have to resolve some questions to get correct answers,” said Josh Hess, a junior.

Students believe that AI is a very helpful tool in schools, not just for inspiration, but also for breaking down problems and trying to understand them in steps.

“I feel like students should be able to use it to study, for step-by-step guides to understand complex topics that they are struggling with,” said Donovan Ramos, a junior.

Most teachers and students think AI is a great tool that can enhance learning if used responsibly, but that it should come with fact-checking and some type of regulation.

AI can be used in unlimited ways, both good and bad. Its boundaries in education, however, are the subject of ongoing debate. What should be allowed, and what shouldn’t?

“In terms of boundaries, I don’t think students should be able to use AI until they have proven that they can do the critical thinking on their own,” said Johnson.

“A common argument I receive in my reluctance to use AI is that teachers also ‘freaked out’ when calculators arrived in schools,” said Johnson.

“My counterargument is, we don’t allow students to use calculators until they can prove that they have mastered the mathematical concepts that a calculator helps them process, so elementary-age students don’t use calculators, but middle school students do,” said Johnson.

“I don’t think getting rid of it is the answer, because it will continue to pop up and people will use it on the down low, like phones, so AI can definitely be helpful but it needs to be regulated for maximum efficiency,” said Taylor Miller, a senior.

Too much of anything is bad. This is why a clear limit on, or regulation of, the use of AI is so crucial at this time.

No, the goal isn’t to ban AI or discourage others from using it. AI can be used in countless incredible ways, but the way it is used matters. It is important to know the difference between healthy and unhealthy usage of AI. Specific guidelines and regulations are essential for equality and for the safety of ourselves and future generations.
